...but it still disgusts me. The hell is wrong with these people!?!?!

"A candidate for the Republican National Committee chairmanship said Friday the CD he sent committee members for Christmas -- which included a song titled 'Barack the Magic Negro' -- was clearly intended as a joke."

"According to The Hill, other song titles, some of which were in bold font, were: 'John Edwards' Poverty Tour,' 'Wright place, wrong pastor,' 'Love Client #9,' 'Ivory and Ebony' and 'The Star Spanglish Banner.'"

This man is named Chip Saltsman, a Tennessean imbecile.

Saturday, December 27, 2008

Sunday, November 02, 2008

List of favorite stories.

...some of these are short, some are epic...

this is just a placeholder, a list of some of my favorite stories I've experienced time and time again.

- robert on the rocks

- the ballad of david bockman

- operation DTVTAP/Country Mouse

- eric's wisdom teeth

- scotch-tape spot

- you did it

- busking in london

- ryan's pudding

- Ramona Quimby, Age Inappropriate

- Whopper Mile

- Smashing Pumpkins

- Bufo Frog Dandy

- Jeremy pee pants

more to be added as I remember them...

Friday, October 31, 2008

Is Land

I'm back again, this time for Fanfest. There's not much I want to ponder here today after a long day of work and jet lag, other than that I'm starting to feel like Reykjavik is my secret home city. I'm beginning to know it pretty well, to be able to turn down the right streets, and to feel at home with the citizens. I think it comes from just being around the language for a while: understanding when smiles are about to come out, when people are actually angry, and when they are just passionate (almost ALWAYS passionate). A poetic, hardy people with a fire inside. Great characteristics to aspire to.

Thursday, October 02, 2008

Reading on the Job

My job demands that I do a lot of critical reading, whether that's e-mails from the marketing department, posts on forums, news interviews or dev blogs. One reason I love my job is that none of this ever gets boring. It is all part of the evolution of games and, to a greater extent, entertainment. To an even greater extent, it is a study of how humans interact with each other. Perhaps that last point is better saved for a later blog post.

One thing I'm seeing increasingly often is some really smart people thinking some really innovative things about gaming. I see more and more pieces like the following one, whether in seminars, panels, Op-Ed pieces, blogs, internal e-mail, or design documents. I found this one particularly interesting and am bringing it here to you. If you say tl;dr, then I say it would be wasted on you anyways.

Originally published by Steve Gaynor at 03.02AM PST, 10/02/08

http://www.gamasutra.com/php-bin/news_index.php?story=20440

October 2, 2008

Opinion: On Invisibility In Game Design

[In this in-depth opinion piece, 2K Marin designer Steve Gaynor considers the immersive implications of a video game developer's visible influence on a final product, and argues for greater "invisibility" in design.]

When one is moved by an artist's work, it's sometimes said that the piece 'speaks' to you. Unlike art, games let you speak back to them, and in return, they reply. If the act of playing a video game is akin to carrying on a conversation, then it is the designer of the game with whom the player is conversing, via the game's systems.

In a strange way then, the designer of a video game is himself present as an entity within the work: as the "computer" -- the sum of the mechanics with which the player interacts. The designer is in the value of the shop items you barter for, the speed and cunning your rival racers exhibit, the accuracy of your opponent's guns and the resiliency with which they shrug off your shots, the order of operations with which you must complete a puzzle. The designer determines whether you win or lose, as well as how you play the game. In a sense, the designer resides within the inner workings of all the game's moving parts.

It's a wildly abstract and strangely mediated presence in the work: unlike a writer who puts his own views into words for the audience to read or hear, or the painter who visualizes an image, creates it and presents it to the world, a game designer's role is to express meaning and experiential tenor via potential: what the player may or may not do, as opposed to exactly what he will see, in what order, under which conditions.

This potential creates opportunity -- the opportunity for the player to wield a palette of expressive inputs, in turn drawing out responses from the system, which finally results in an end-user experience that, while composed of a finite set of components, is nonetheless a unique snowflake, distinct from any other player's.

One overlapping consideration of games and the arts is the degree to which the artist or designer reveals evidence of his hand in the final work. In fine art, the role of the artist's hand has long been manipulated and debated: ancient Greek sculptors and Renaissance painters burnished their statuary and delicately glazed their oils to disguise any evidence of the creator's involvement, attempting to create idealized but naturalistic images -- windows to another moment in reality, realistic representations of things otherwise unseeable in an age before photography.

Impressionist artists, followed by the Abstract Expressionists, embraced the artist's presence in the form of raw daubs and splashes of paint, drifting away from or outright opposing representational art in the age of photographic reproduction. Minimalists and Pop artists sought in response to remove the artist's hand from the equation through industrial fabrication techniques and impersonal commercial printing methods, returning the focus to the image itself, as a way of questioning the validity of personal and emotional artistic themes in the modern age.

The designer's presence in a video game might be similarly modulated, to a variety of ends. If a designer lives in the rules of the gameworld, then it is the player's conscious knowledge of the game's ruleset that exposes evidence of his hand.

Take for instance a game like Tetris. Tetris is almost nothing but its rules: its presentation is the starkest visualization of its current system state; it features no fictional wrapper or personified elements; any meaning it exudes or emotions it fosters are expressed entirely through the player's dialogue with its intensely spare ruleset.

The game might speak to any number of themes -- anxiety, Sisyphean futility, the randomness of an uncaring universe -- and it does so only through an abstract, concrete and wholly transparent set of rules. The player is fully conscious of the game's rules and is in dialogue only with them -- and thereby with the designer, Alexey Pajitnov -- at all times when playing Tetris.

While the game's presentation is artistically minimalist, the design itself is integrally formalist. But whereas formalism in the fine arts is meant to exclude the artist's persona from interpretation of the work, a formalist video game consists only of its exposed ruleset, and thereby functions purely as a dialogue with the designer of those rules.

Embracing this abstract formalist approach requires the designer to let go of naturalistic simulation, but allows the most direct connection between designer and player: a pure conduit for ideas to be expressed through rules and states.

Alternatively, the designer's hand is least evident when players are wholly unconscious of the gameworld's underlying ruleset. I don't mean here abstract formalist designs wherein the mechanics are intentionally obscured -- in that case, "the player cannot easily obtain knowledge of the rules" is simply another rule.

Rather, I refer to "immersive simulations" -- games that attempt to utilize the rules of our own world as fully as possible, presenting clearly discernible affordances and supplying the player with appropriate inputs to interact with the gameworld as he might the real world. The ultimate node on this design progression would be the experience of The Matrix or Star Trek's holodeck -- a simulated world that for all intents and purposes functions identically to our own.

This approach to game design has the most in common with Renaissance artists' attempts to precisely model reality through painting, to much the same ends: an illusionistically convincing work which might 'trick' the viewer into mistaking the frame (of the painting or the monitor) for a window into an alternate viewpoint on our own reality.

However, where Renaissance artists needed to model our world visually, designers of immersive simulations strive to model our world functionally. This utilization of an underlying ruleset that is unconsciously understood by the player allows the work of the designer to remain invisible, setting up the game as a more perfect stage for others' endeavors -- the player's self-expression, and the writer's and visual artist's craft -- as well as presenting a more perfectly transparent lens through which the game's alternate reality may be viewed.

Every time the player is confronted with overt rules that they must acknowledge consciously, the lens is smudged, the stage eroded; at every point that a simulated experience deviates from the Holodeck ideal, the designer's hand is exposed to the player, drawing attention away from the world as a believable place, and onto the limitations of an artificial set of concrete rules governing the experience.

Clearly, the ideal, virtual reality version of "being there" is impossible with current technology. Tech will progress in time; the question is, how do current design conventions unintentionally draw the designer's hand to the fore, sullying the immersiveness of the end-user experience?

One common pitfall might be an over-reliance on a Hollywood-derived linear progression structure, which in turn confronts the player with a succession of mechanical conditions they must fulfill to proceed. If I, as a player, must defeat the boss, or pull the bathysphere lever, or slide down the flagpole to progress from level 1 to level 2, then I understand the world in a limited, artificial way.

Space doesn't exist as a line, nor are our lives composed of a linear sequence of deterministic events; when our gameworlds are arranged this way, the player must be challenged to satisfy their arbitrary win conditions, which in turn requires that they understand the limited rules which constrain the experience.

The designer's role is dictatorial, telling the player "here are the conditions that I've decided you must satisfy." The player's inputs test against these pre-determined conditions until they are fulfilled, at which point the designer allows the player to progress. Within this structure, the designer's hand looks something like the following:

Creating games without a linear progression structure, and therefore without overt, challenge-based gating goals, allows the player to inhabit the space with a rhythm that mirrors the pacing of their own life rather than a movie's, instead of focusing on artificial pinch points that cinch the gameworld's possibility space into a straight line.

Another offending convention concerns where the game's control scheme lives. In character-driven games, the player's inputs most commonly reside in the controller itself, requiring the player to memorize which button does what. The simple fact that the player can only perform actions which are mapped to controller buttons confronts them with the limitations of their role within the world; the player-character is not a "real person" but a tiny bundle of verbs wandering around the world.

Run, jump, punch, shoot, gas, brake, and occasionally a more nuanced context action when they stand in the right spot -- these are the extent of the player's agency. More pointedly, having to memorize button mapping is a ruleset itself, and one that pulls players out of the experience. "How do I jump?" "What does the B button do?" These are concerns that distract from the experience of being there.

Alternatively, the game's control scheme might live largely within the simulation itself. If the player's possible interactions lived within the objects in the gameworld instead of within the control pad, the player's range of interactions would only be limited by the extent to which the designer supported them, as opposed to the number of buttons on the controller.

Likewise, the more interactions that are drawn out of the gameworld itself, as opposed to being fired into it by the player, the more immersed the player is in the experience of being there, as opposed to the mastery of an ornate control scheme. This control philosophy does not support many games that rely on quick reflexes and life-or-death situations, but perhaps that isn't such a bad thing. One need only look at the success of The Sims and extrapolate its control philosophy outward: each object in the world is filled with unique interactions, resulting in seemingly endless possibilities spread out before the player.

A related convention that unduly exposes a game's underlying mechanics results from our need to communicate the player-character's physical state to the player. In many genre games, the player must know his character's current level of health, stamina, and so forth. In real life, one is simply aware of their own physical state; however, since games must communicate relevant information almost entirely through the visuals, we end up with health bars, numerical hitpoint readouts, and pulsing red screen overlays to communicate physical state.

The player, then, is less concerned with their character being "hurt" or "in pain" than with their being "damaged," like a car or a toy. The rules become transparent: when I lose all my hit points I die; when I use a health kit I recover a certain percentage of my hit points; I am a box of numbers, as opposed to a real person in a real place.

Similar to the prior point, the hitpoint problem presents a limitation native to game genres which rely on combat and life-or-death situations as their core conflicts, as opposed to implying an insurmountable limitation of the medium as a whole. If I am not in danger of being shot, stabbed, bitten or crushed, then I am free to relate to my player-character in human terms instead of numerical status, thus remaining unconscious of the designer's hand.

All this isn't to say that downplaying the designer's hand is an inherently superior design philosophy; clearly, many of us connect deeply with the conscious interaction between player and machine. But as our industry rides a wave of visual fidelity ever forward, our reliance on game genres tied to the assimilation of concrete rulesets only deepens the schism between player expectations and simulational veracity.

It's been posited that games are poised to enter a golden age -- a renaissance, one might say -- and as designers, we might do well to step out of the spotlight, stop obscuring the lens into our simulated worlds, and embrace the virtues of invisibility.

Friday, September 26, 2008

Yeats and A Heartbeat Away

Take a look at this

Then consider this number: 700 Billion

And now read this, by William Butler Yeats:

Turning and turning in the widening gyre
The falcon cannot hear the falconer;
Things fall apart; the center cannot hold;
Mere anarchy is loosed upon the world,
The blood-dimmed tide is loosed, and everywhere
The ceremony of innocence is drowned;
The best lack all conviction, while the worst
Are full of passionate intensity.

Surely some revelation is at hand;
Surely the Second Coming is at hand.
The Second Coming! Hardly are those words out
When a vast image out of Spiritus Mundi
Troubles my sight: somewhere in sands of the desert
A shape with lion body and the head of a man,
A gaze blank and pitiless as the sun,
Is moving its slow thighs, while all about it
Reel shadows of the indignant desert birds.
The darkness drops again; but now I know
That twenty centuries of stony sleep
Were vexed to nightmare by a rocking cradle,
And what rough beast, its hour come round at last,
Slouches towards Bethlehem to be born?

On a personal level I find myself mirroring America in strange ways.

Times indeed, my friends. Times.

Thursday, September 11, 2008

Are you taking the paper?

Perhaps my luck is ridiculous. Perhaps the accumulated karma from years of my mom asking me if I "take the paper" (while insinuating that I might be missing something or am somehow socially inept because I am not culturally exposed) has pushed me towards a brush with fate.

Context: While waiting for several work friends at a local watering hole on a dark and dreary night, I found a discarded newspaper to my left. Unwilling to bend my attention to the Fox News playing on the TV screens provided at this social gathering place, I had only one natural choice: to explore this foreign and oft-avoided medium.

The third thing I read was brilliant. I consider myself a lottery winner.

I am reprinting it here as I believe the thoughts therein to be both poignant and, well, freakin' spot-on.

Brooks: Lord of the Memes

By David Brooks

Published: August 8, 2008

Dear Dr. Kierkegaard,

All my life I've been a successful pseudo-intellectual, sprinkling quotations from Kafka, Epictetus and Derrida into my conversations, impressing dates and making my friends feel mentally inferior. But over the last few years, it's stopped working. People just look at me blankly. My artificially inflated self-esteem is on the wane. What happened?

Existential in Exeter

Dear Existential,

It pains me to see so many people being pseudo-intellectual in the wrong way. It desecrates the memory of the great poseurs of the past. And it is all the more frustrating because your error is so simple and yet so fundamental.

You have failed to keep pace with the current code of intellectual one-upsmanship. You have failed to appreciate that over the past few years, there has been a tectonic shift in the basis of good taste.

You must remember that there have been three epochs of intellectual affectation. The first, lasting from approximately 1400 to 1965, was the great age of snobbery. Cultural artifacts existed in a hierarchy, with opera and fine art at the top, and stripping at the bottom. The social climbing pseud merely had to familiarize himself with the forms at the top of the hierarchy and febrile acolytes would perch at his feet.

In 1960, for example, he merely had to follow the code of high modernism. He would master some impenetrably difficult work of art from T.S. Eliot or Ezra Pound and then brood contemplatively at parties about Lionel Trilling's misinterpretation of it. A successful date might consist of going to a reading of "The Waste Land," contemplating the hollowness of the human condition and then going home to drink Russian vodka and suck on the gas pipe.

This code died sometime in the late 1960s and was replaced by the code of the Higher Eclectica. The old hierarchy of the arts was dismissed as hopelessly reactionary. Instead, any cultural artifact produced by a member of a colonially oppressed out-group was deemed artistically and intellectually superior.

During this period, status rewards went to the ostentatious cultural omnivores - those who could publicly savor an infinite range of historically hegemonized cultural products. It was necessary to have a record collection that contained "a little bit of everything" (except heavy metal): bluegrass, rap, world music, salsa and Gregorian chant. It was useful to decorate one's living room with African or Thai religious totems - any religion so long as it was one you could not conceivably believe in.

But on or about June 29, 2007, human character changed. That, of course, was the release date of the first iPhone.

On that date, media displaced culture. As commenters on The American Scene blog have pointed out, the means of transmission replaced the content of culture as the center of historical excitement and as the marker of social status.

Now the global thought-leader is defined less by what culture he enjoys than by the smartphone, social bookmarking site, social network and e-mail provider he uses to store and transmit it. (In this era, MySpace is the new leisure suit and an AOL e-mail address is a scarlet letter of techno-shame.)

Today, Kindle can change the world, but nobody expects much from a mere novel. The brain overshadows the mind. Design overshadows art.

This transition has produced some new status rules. In the first place, prestige has shifted from the producer of art to the aggregator and the appraiser. Inventors, artists and writers come and go, but buzz is forever. Maximum status goes to the Gladwellian heroes who occupy the convergence points of the Internet infosystem - Web sites like Pitchfork for music, Gizmodo for gadgets, Bookforum for ideas, etc.

These tastemakers surf the obscure niches of the culture market bringing back fashion-forward nuggets of coolness for their throngs of grateful disciples.

Second, in order to cement your status in the cultural elite, you want to be already sick of everything no one else has even heard of.

When you first come across some obscure cultural artifact - an unknown indie band, organic skate sneakers or wireless headphones from Finland - you will want to erupt with ecstatic enthusiasm. This will highlight the importance of your cultural discovery, the fineness of your discerning taste, and your early adopter insiderness for having found it before anyone else.

Then, a few weeks later, after the object is slightly better known, you will dismiss all the hype with a gesture of putrid disgust. This will demonstrate your lofty superiority to the sluggish masses. It will show how far ahead of the crowd you are and how distantly you have already ventured into the future.

If you can do this, becoming not only an early adopter, but an early discarder, you will realize greater status rewards than you ever imagined. Remember, cultural epochs come and go, but one-upsmanship is forever.

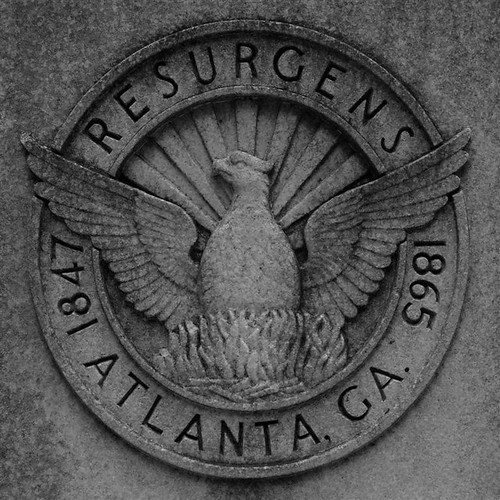

Re:

It is time that Renaissance Tan underwent some changes. Not that it is stale, or outdated, or that the posts therein have gone rancid. It's more that the Ishmael behind them found another whale to survive. A long Odyssey has ended.

My good friend, incredible and inspiring author Will Hindmarch, wrote the following to me recently in a time of great need:

"Also, blog. Or write a short story. Something. You're too good a writer to not be doing it. Seriously."

I've had that advice before. From a loving mother. From friends. From inspiring teachers.

Perhaps it takes a sharp turn for things to really sink in. For self-realization to be a bit more obvious. For self-expression to be important.

So here I am. Revamped. Rededicated and resolute. There's a lot of "re" in me now and I intend to exploit that.

Φοῖνιξ

Monday, September 08, 2008

Sunday, August 10, 2008

Wednesday, June 25, 2008

Russian Attack!

Saturday, June 14, 2008

Icelandic Odyssey

Sunday, May 18, 2008

Is this unpatriotic?

Well gee, thanks Uncle Sam! I'd like to fully love my country too. When I was a kid, I recognized pretty early on that I'd rather have been born here than anywhere else in the world. I felt honored to come into one of the freest and most accepting societies there has ever been, where the individual is mostly allowed to find their own way. Growing up I kept that love, even though hints of iniquity started to shine through from a past I had never witnessed until I encountered it in history class. Slavery, Civil Rights: "mostly" righted but still present. At least we're headed in the right direction.

While I have only tangentially studied American military history, via my other historical pursuits and a lot of late nights watching the History Channel, there have been few people on this earth I am more in awe of than the common soldier. From the musket-wielders to those trudging across the beaches of Normandy. From those pressed into the thick jungles of Asia to those now fighting in penance for an arrogant president. Theirs is to jump, headfirst, into situations I would have nightmares about. To be a proxy for their friends and family, their towns and counties, their states and country, so that others do not have to die. They are a spiritual manifestation of the will of the country and the physical manifestation that gets it done.

"What the Iraqi fighter found threatened America's vital alliance with Sunni militia.

A week ago in a police station shooting range on Baghdad's western outskirts, the American-allied Iraqi militiaman found what one or more GIs had been using for target practice -- a copy of the Quran, Islam's holy book.

Riddled with bullets, the rounds piercing deep into the thick volume, the pages were shredded. Turning the holy book in his hands, the man found two handwritten English words, scrawled in pen. "F*** yeah.""

I try not to let one bad apple spoil the basket, but what about when it is a symptom of a greater problem: that after all these years the average soldier still isn't instructed in the proper handling of the Quran, in how important it is to remember that you're not only killing Muslims ("evil terrorists") but also working with them ("freedom-loving police"), or in any damn thing about Abu Ghraib?!

I've seen video and voice recordings of the soldiers in Iraq, of their "F*** yeah" attitude, blaring death metal while firing .50 calibers into buildings, unapologetic hatred for the enemy, all of course normal in such extreme circumstances. However, this is yet another example of a complete lack of leadership and personal responsibility. Another shovelful of dirt out of the grave plot I might be forced to bury my patriotism in. This is not the soldier I can or should believe in, and it saddens me that a position of such honor, one that carries such a burden, could sully itself, and that those who command it allowed it to happen in the first place.

Of course, the story I quoted from today's CNN.com piece takes a turn for the better, as the religious leaders in the surrounding town were gracious enough, wise enough and loving enough to accept a humble apology from the commanding officer when they could very easily have demanded retribution. It was a scene not unlike many before it, where the sins of the few were forgiven by the few and the world is better for it.

Friday, April 11, 2008

Wednesday, March 26, 2008

Invention by deletion.

I'm not a huge fan of just direct linking other people's ideas that I run across through my daily RSS feeds here. That's what shared Google Reader is for.

However, the idea behind "Garfield without Garfield" fascinates me. It is the true genius of Jim Davis--because, face it, Garfield him/herself has never broken the humor threshold.

It is the hidden story of Jon Arbuckle, which the "re-creator" puts perfectly:

Who would have guessed that when you remove Garfield from the Garfield comic strips, the result is an even better comic about schizophrenia, bipolar disorder, and the empty desperation of modern life? Friends, meet Jon Arbuckle [Special props to those who "get" this link reference]. Let’s laugh and learn with him on a journey deep into the tortured mind of an isolated young everyman as he fights a losing battle against loneliness in a quiet American suburb.

Thursday, March 20, 2008

The Good Kind of B.O.

So the last few of my admittedly-few-and-far-between blog posts have been about Barack Obama. I deleted them because I hate repetition in writing. This blog isn't only about politics, even if it is what I've felt moved to write about recently.

The first Obamapost detailed my excitement over his win in Iowa and my humble plea to Iowa to forgive me for so diligently making fun of it and its Midwestern neighbors from my East Coast ivory tower. Go Iowa. The second lauded John Lewis, my local rep and a long-time hero of mine, for switching his support to Barack. What took him so long, I don't know.

Well, plenty of other states have followed suit. There's momentum. Fantastic momentum (excepting dumb Texas, Ohio, and Rhode Island, the new "Midwests" for me).

Just when you thought the train was headed in the right direction...

Barack tosses a huge ol' shovelful of coal into the furnace and the train levitates off the tracks, turns skyward, Autobots itself a bit, turns into a freakin' Millennium Falcon and jumps to lightspeed.

See one of the best speeches ever here: A More Perfect Union
